Next: Bibliography

Up: Image Compression Using Transformations

Previous: Experimental Results

From the curves we can see that in general with higher average rate for each

pixel, the PSNR of the reconstructed image gets larger. This makes sense

since when we represent the images with more bits, the images

are better reconstructed in terms of PSNR and the visual performance

as well. (See Figure ![[*]](cross_ref_motif.gif) ).

In our experiments this fact is proved by our three different algorithms.

However, we still can see some jumps in the curves. The main reason

for this is that using more bits does

not necessarily mean a better reconstruction, since reconstruction is

highly related to how we quantize our transformed data to get our bit

stream. If we design a wrong quantization table, such as assigning

more bits for small coefficients and less bits for

large coefficients, then the reconstruction will be very

much dependent the length of the bits stream.

Although we believe that most of the time this design of the quantization tables

is reasonable, the quantization mask does not necessarily

match the block information for every block to be encoded. For EZW algorithm, we have a

similar explanation. For example, if we uniformly quantize 15 in the

range of 0 and 32 with one quantization level, then the reconstruction

value is 16, which is a good approximation. If we quantize

it in the same range with two level quantization, a better reconstruction

value should be obtained, however, what we get is 8, which is an even

worse approximation. This is the reason why in the EZW algorithm

with more bits, meaning more level quantization, we don't necessarily

get better reconstruction.

).

In our experiments this fact is proved by our three different algorithms.

However, we still can see some jumps in the curves. The main reason

for this is that using more bits does

not necessarily mean a better reconstruction, since reconstruction is

highly related to how we quantize our transformed data to get our bit

stream. If we design a wrong quantization table, such as assigning

more bits for small coefficients and less bits for

large coefficients, then the reconstruction will be very

much dependent the length of the bits stream.

Although we believe that most of the time this design of the quantization tables

is reasonable, the quantization mask does not necessarily

match the block information for every block to be encoded. For EZW algorithm, we have a

similar explanation. For example, if we uniformly quantize 15 in the

range of 0 and 32 with one quantization level, then the reconstruction

value is 16, which is a good approximation. If we quantize

it in the same range with two level quantization, a better reconstruction

value should be obtained, however, what we get is 8, which is an even

worse approximation. This is the reason why in the EZW algorithm

with more bits, meaning more level quantization, we don't necessarily

get better reconstruction.

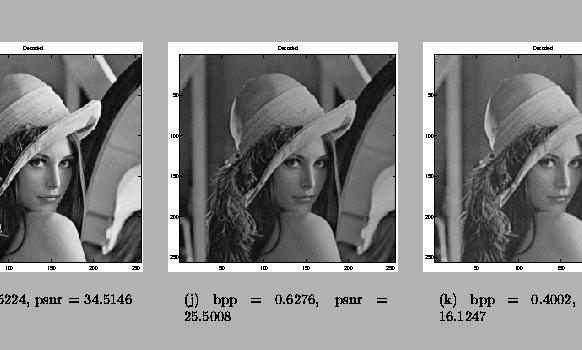

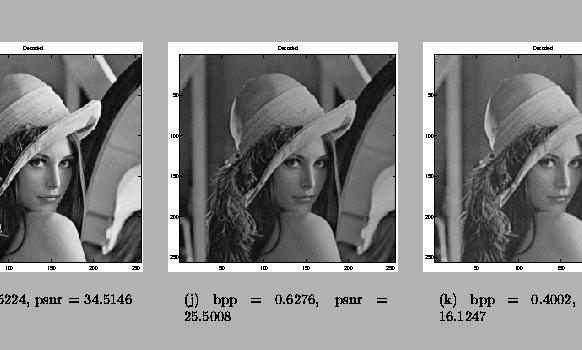

Figure:

Reconstructed images and the performance.

Figure:

Reconstructed images and the performance.

|

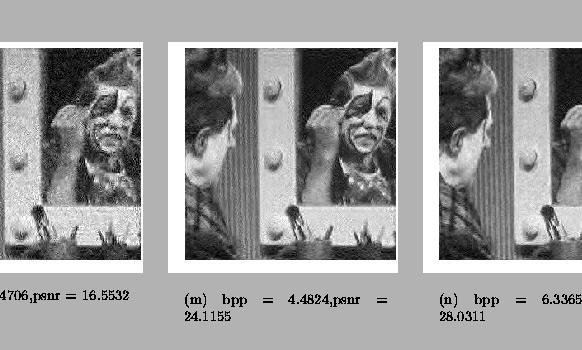

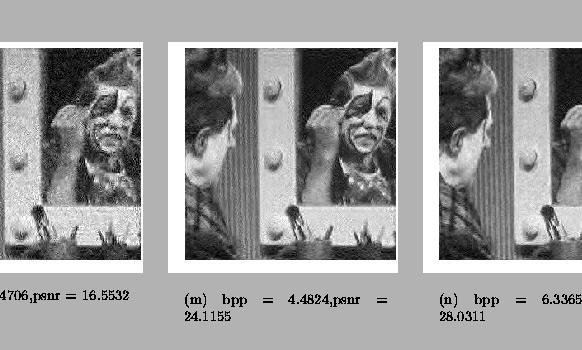

Figure:

Reconstructed images and the performance.

Figure:

Reconstructed images and the performance.

|

Note that best transform method over almost all rates was the

Haar Wavelet transform. This was extremely surprising given the ad hoc method used

to determine the quantization size for each block. However, we believe that this is

due to the storage of the minimum and maximum values of each block for decompression.

In this way we were able to keep our interval size as small as possible for the cost

of a bit of overhead. Thus, the uniform reconstruction interpolator was able to better

approximate the original transformed values. This is especially important when you

consider the smallest block in the upper left quadrant. We stated above that accuracy in

this block is extremely valuable due to the high amount of information stored there.

Moreover, unlike all the other blocks, the elements in this block can never be negative.

So to assign a uniform maximum and minimum value to all blocks at this quantization level

would end up placing negative values in the upper left block. This would give tremendous

error, as was seen during experimentation. So the tighter bounds we get with stored block

minima and maxima give much lower MSE for a small cost to the Rate. Notice, however, that

as the compression gets very large, the overhead will add a lot to the MSE.

As for the DCT, MSE increased rapidly as the BPP decreased. However, we managed to keep the edges of the image at all times. What degraded was not a blurring of the edges, but an overall contrast loss. As the BPP decreased, the image continuously lost the dark colors it originally had. So at very high compression, we could still tell what the image is but it seemed to be covered by a layer of fog. Something else we noticed was that a high MSE does not necessarily correspond to a bad image. We actually saw this with all three compression methods. The quality of the image was related more to the mask placement than BPP. This suggests that adaptively creating the mask as we encode the image would give us better quality.

Next: Bibliography

Up: Image Compression Using Transformations

Previous: Experimental Results

Andrew Doran

Cherry Wang

Huipin Zhang

1999-04-14

![[*]](cross_ref_motif.gif) ).

In our experiments this fact is proved by our three different algorithms.

However, we still can see some jumps in the curves. The main reason

for this is that using more bits does

not necessarily mean a better reconstruction, since reconstruction is

highly related to how we quantize our transformed data to get our bit

stream. If we design a wrong quantization table, such as assigning

more bits for small coefficients and less bits for

large coefficients, then the reconstruction will be very

much dependent the length of the bits stream.

Although we believe that most of the time this design of the quantization tables

is reasonable, the quantization mask does not necessarily

match the block information for every block to be encoded. For EZW algorithm, we have a

similar explanation. For example, if we uniformly quantize 15 in the

range of 0 and 32 with one quantization level, then the reconstruction

value is 16, which is a good approximation. If we quantize

it in the same range with two level quantization, a better reconstruction

value should be obtained, however, what we get is 8, which is an even

worse approximation. This is the reason why in the EZW algorithm

with more bits, meaning more level quantization, we don't necessarily

get better reconstruction.

).

In our experiments this fact is proved by our three different algorithms.

However, we still can see some jumps in the curves. The main reason

for this is that using more bits does

not necessarily mean a better reconstruction, since reconstruction is

highly related to how we quantize our transformed data to get our bit

stream. If we design a wrong quantization table, such as assigning

more bits for small coefficients and less bits for

large coefficients, then the reconstruction will be very

much dependent the length of the bits stream.

Although we believe that most of the time this design of the quantization tables

is reasonable, the quantization mask does not necessarily

match the block information for every block to be encoded. For EZW algorithm, we have a

similar explanation. For example, if we uniformly quantize 15 in the

range of 0 and 32 with one quantization level, then the reconstruction

value is 16, which is a good approximation. If we quantize

it in the same range with two level quantization, a better reconstruction

value should be obtained, however, what we get is 8, which is an even

worse approximation. This is the reason why in the EZW algorithm

with more bits, meaning more level quantization, we don't necessarily

get better reconstruction.