Next: Conclusions

Up: Image Compression Using Transformations

Previous: An example

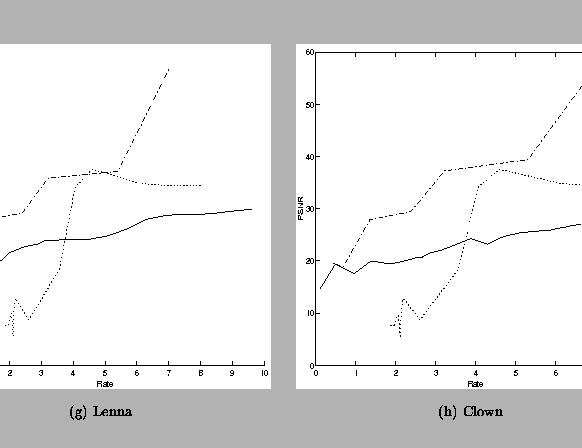

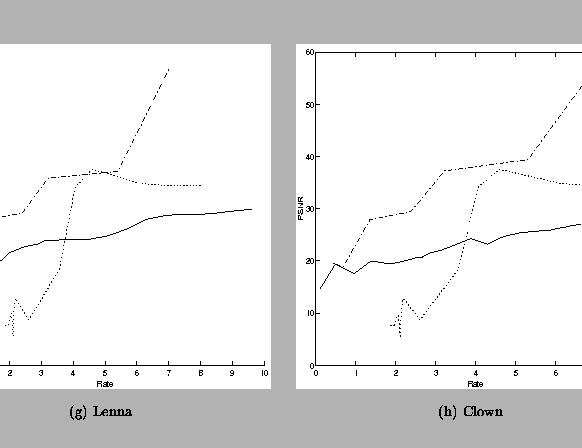

To test our three algorithms, we compress the standard test images Lenna and Clown (see Figures ![[*]](cross_ref_motif.gif) and ![[*]](cross_ref_motif.gif)).

For the DCT- and wavelet-based compression algorithms, we measured the PSNR of the compressed images at various compression rates in order to evaluate the performance of our coders. To do this, we had to generate a bit allocation mask for each desired compression rate. For the wavelet transform, we developed a function (mask.m) that creates masks at compression rates of 1, 0.75, 0.675, 0.5, 0.25, 0.125, and 0.0625. For the DCT, we used a somewhat more ad hoc method to develop the masks. Also, for the Haar DWT, we stopped transforming the input image at a minimum block size of .
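
Stopping the Haar DWT at a minimum block size means the 2D transform is reapplied only to the low-pass (top-left) block, level after level, until that block has shrunk to the minimum size. The following is a minimal pure-Python sketch of that idea, not the authors' MATLAB code. It assumes a square image (list of rows) whose side is a power of two, uses the orthonormal 1/√2 Haar scaling, and the names `haar_1d`, `haar_2d_level`, `haar_dwt` and the parameter `min_block` are hypothetical.

```python
import math


def haar_1d(v):
    """One level of the orthonormal 1D Haar transform: averages first, then details."""
    s = 1 / math.sqrt(2)
    half = len(v) // 2
    avg = [(v[2 * i] + v[2 * i + 1]) * s for i in range(half)]
    det = [(v[2 * i] - v[2 * i + 1]) * s for i in range(half)]
    return avg + det


def haar_2d_level(img, n):
    """Apply one 2D Haar level to the top-left n x n block of img, in place."""
    # Transform each row of the n x n block.
    for r in range(n):
        img[r][:n] = haar_1d(img[r][:n])
    # Transform each column of the n x n block.
    for c in range(n):
        col = haar_1d([img[r][c] for r in range(n)])
        for r in range(n):
            img[r][c] = col[r]


def haar_dwt(img, min_block):
    """Multi-level Haar DWT that stops once the low-pass block is min_block wide.

    Assumes a square image with power-of-two side length (an assumption of
    this sketch). The input is copied, not modified.
    """
    out = [list(row) for row in img]
    n = len(out)
    while n > min_block:
        haar_2d_level(out, n)
        n //= 2  # the next level only transforms the new low-pass (LL) block
    return out
```

For example, `haar_dwt(img, 8)` on a 256×256 image applies five levels (256, 128, 64, 32, 16) and leaves an 8×8 low-pass block in the top-left corner; 8 is an arbitrary choice here. Because the scaling is orthonormal, the total energy (sum of squared coefficients) is preserved, which keeps MSE measured in the transform domain consistent with MSE in the pixel domain.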

We then measured the MSE of the compressed images and calculated the PSNR from it. The bits-per-pixel figure for each image was based on the file size of the gzipped compressed image. The results of this experiment on both the Lenna and Clown images can be seen in Figure ![[*]](cross_ref_motif.gif).
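
The measurement step can be sketched as follows, using PSNR = 10 log10(255² / MSE). This is a minimal sketch under assumptions not stated in the report: 8-bit grayscale images stored as lists of rows (so the peak value is 255), and a bit rate of 8 × (gzipped size in bytes) / (number of pixels). Python's `gzip.compress` stands in for running gzip on a file, and the function names are hypothetical.

```python
import gzip
import math


def mse(original, reconstructed):
    """Mean squared error between two equally sized 2D images (lists of rows)."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(original, reconstructed):
        for a, b in zip(row_a, row_b):
            total += (a - b) ** 2
            count += 1
    return total / count


def psnr(original, reconstructed, peak=255.0):
    """PSNR in dB, assuming `peak` is the largest possible pixel value."""
    err = mse(original, reconstructed)
    if err == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / err)


def bits_per_pixel(compressed_bytes, width, height):
    """Bit rate based on the size of the gzipped compressed data."""
    gz_size = len(gzip.compress(compressed_bytes))
    return 8 * gz_size / (width * height)
```

Note that the gzip header adds a few bytes of overhead, which slightly inflates the bit rate at very low rates.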

For the EZW algorithm, quantization is performed implicitly in each subordinate pass, so the effective quantization is determined by how much of the bit stream is used for reconstruction. For the experiment, we first generate a bit stream that can be used for perfect reconstruction of the image. From the desired compression ratio, we then determine how many bits are needed for reconstruction, truncate the bit stream to that length, and reconstruct the image from the truncated bits. Because EZW is a progressive (embedded) coding algorithm, a bit stream truncated at any point still reconstructs an approximation of the original image.
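
The truncation step works because an embedded stream is ordered by importance, so every prefix decodes to a coarser approximation. The sketch below illustrates this with plain bit-plane coding of integer coefficients. It is a deliberately simplified stand-in, not EZW: there are no zerotrees and no dominant or subordinate passes, signs are sent up front, and missing bits are decoded as zero rather than at the midpoint of the uncertainty interval. The function names and the bit-budget rule `int(bpp * width * height)` are assumptions for illustration.

```python
def encode_bitplanes(coeffs, num_planes):
    """Emit all sign bits, then magnitude bits from the most significant plane down."""
    bits = [1 if c < 0 else 0 for c in coeffs]  # sign bits first (simplification)
    for p in range(num_planes - 1, -1, -1):
        bits.extend((abs(c) >> p) & 1 for c in coeffs)
    return bits


def decode_bitplanes(bits, n, num_planes):
    """Reconstruct n coefficients from any prefix of the stream; missing bits count as 0."""
    signs = bits[:n] + [0] * max(0, n - len(bits))
    mags = [0] * n
    pos = n
    for p in range(num_planes - 1, -1, -1):
        for i in range(n):
            if pos < len(bits) and bits[pos]:
                mags[i] |= 1 << p
            pos += 1
    return [-m if s else m for m, s in zip(mags, signs)]


def truncate_to_rate(bits, bpp, width, height):
    """Keep only as many bits as the target bit rate allows (hypothetical budget rule)."""
    budget = int(bpp * width * height)
    return bits[:budget]
```

Decoding `truncate_to_rate(bits, bpp, width, height)` for decreasing `bpp` gives progressively coarser coefficients, and the squared error never increases as more bits are kept, since each extra magnitude bit moves the estimate closer to the true value.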

Figure: PSNR vs. bit rate for Lenna and Clown. The solid curve is obtained using the EZW algorithm, the dotted curve using the DCT, and the dash-dot curve using the wavelet transform together with a specially designed bit allocation mask.



Andrew Doran

Cherry Wang

Huipin Zhang

1999-04-14
